Data Center Cooling Technologies

Heat is a very good thing if you happen to live in Fargo, North Dakota; Duluth, Minnesota; or elsewhere near the Canadian border during the dead of winter. But even in those frigid areas, heat can cause quite a bit of damage to an organization’s data center deployment. In addition to high temperatures, improper humidity levels can also increase operational risk, accelerate equipment failure and even lead to fires.1

Data center operators have multiple methods available to maintain proper temperature and humidity levels in their facilities, and newer approaches give data center managers more options for choosing the most effective one for their environment.

The various methods of data center cooling share a straightforward strategy: remove excess heat from the IT equipment and the surrounding data center area, thereby reducing the potential for equipment damage and downtime. Let’s take a look at several proven approaches.

Stay Chill with Deployment Visibility

It should go without saying that your data center staff must be able to monitor environmental conditions of deployments in order to effectively manage them.

CoreSite’s Customer Service Delivery Platform includes a component called CoreINSITE® that provides near real-time reporting of data center environmental information including power consumption and circuit data as well as temperature and humidity readings.

Mechanical Cooling

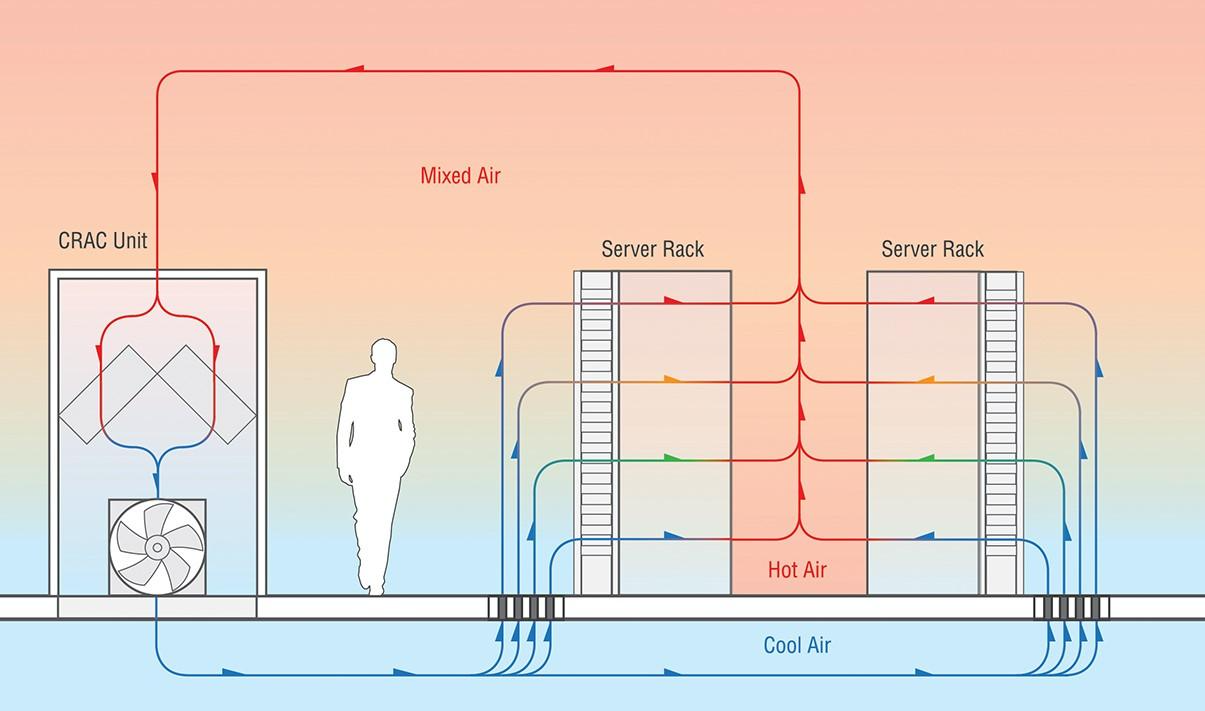

Air cooling uses computer room air conditioners (CRACs) or computer room air handlers (CRAHs) to supply cold air and remove heat from data center server rooms. This is similar to how residences and commercial buildings are cooled. Both CRAC and CRAH units use a cooling medium (typically refrigerant or water) inside a coil to extract heat from the air and transfer it outside, most commonly to external condensers, chillers and cooling towers.

Free Cooling

Free cooling is an alternative to mechanical cooling. With free cooling, large air handlers employing fans and filters draw in and circulate outside air, while return fans pull hot air out of the facility.

Many chiller plants, especially those in cooler climates, leverage water-side economization as a means of free cooling. In this scenario, when outside conditions are appropriate, condenser water and chilled water exchange heat, typically in a plate-and-frame heat exchanger, bypassing the mechanical refrigeration cycle that occurs within a chiller. This allows the heat from the data center return chilled water to be rejected to the atmosphere with much less energy use.
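
To make "when outside conditions are appropriate" a little more concrete, here is a minimal Python sketch of a water-side economizer check. It assumes a simplified rule of thumb: free cooling is available when the outdoor wet-bulb temperature, plus the cooling tower approach, plus the heat exchanger approach, is at or below the chilled water supply setpoint. The function name and the default approach values are illustrative assumptions, not actual plant parameters.

```python
def can_free_cool(
    wet_bulb_f: float,
    chilled_water_setpoint_f: float,
    tower_approach_f: float = 7.0,  # assumed: tower water leaves this far above wet bulb
    hx_approach_f: float = 2.0,     # assumed: loss across the plate-and-frame exchanger
) -> bool:
    """Return True if the heat exchanger alone could meet the chilled water setpoint."""
    # Coldest chilled water the economizer could deliver under these assumptions.
    achievable_supply_f = wet_bulb_f + tower_approach_f + hx_approach_f
    return achievable_supply_f <= chilled_water_setpoint_f


if __name__ == "__main__":
    # Cool day: 40 F wet bulb against a 55 F setpoint.
    print(can_free_cool(40.0, 55.0))
    # Warm, humid day: 60 F wet bulb against the same setpoint.
    print(can_free_cool(60.0, 55.0))
```

A real plant controller would also account for partial economization, changeover hysteresis and freeze protection, none of which are modeled here.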

Liquid Cooling

Because liquid is more efficient than air in transferring heat, it can be more effective than air cooling. It also can support greater server densities and high-power chips that generate a lot of heat. There are two main types of liquid cooling:

- Liquid immersion cooling places an entire electrical device into dielectric fluid in a closed system. The fluid absorbs the heat emitted by the device and rejects it to another medium (typically water) via a heat exchanger. In sealed, two-phase immersion cooling, heat from the server boils the fluid, turning it into vapor. The vapor is then cooled and condensed using water-cooled heat exchangers, allowing the cycle to continue.

- The other method is direct-to-chip liquid cooling, which brings nonflammable dielectric fluid directly to the highest heat-generating components of the server, such as a CPU or GPU. The fluid absorbs the heat and converts to vapor. The vapor is then carried away to be condensed and sent back to the chip as a cold fluid.
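
For a rough sense of why liquid carries heat so much better than air, the sketch below compares how much heat a cubic meter of each can absorb per degree of temperature rise. It uses water as a stand-in liquid with approximate room-temperature property values; dielectric fluids have different properties, so treat the numbers as illustrative assumptions rather than a specification for any particular coolant.

```python
# Approximate properties near room temperature (assumed, rounded values).
WATER_DENSITY_KG_M3 = 997.0
WATER_SPECIFIC_HEAT_J_KG_K = 4180.0
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0


def volumetric_heat_capacity(density_kg_m3: float, specific_heat_j_kg_k: float) -> float:
    """Heat absorbed by one cubic meter of fluid per kelvin of temperature rise (J/(m^3*K))."""
    return density_kg_m3 * specific_heat_j_kg_k


water = volumetric_heat_capacity(WATER_DENSITY_KG_M3, WATER_SPECIFIC_HEAT_J_KG_K)
air = volumetric_heat_capacity(AIR_DENSITY_KG_M3, AIR_SPECIFIC_HEAT_J_KG_K)
ratio = water / air

if __name__ == "__main__":
    print(f"Water: {water:,.0f} J/(m^3*K)")
    print(f"Air:   {air:,.0f} J/(m^3*K)")
    print(f"Water absorbs roughly {ratio:,.0f}x more heat per unit volume")
```

With these assumed values the ratio comes out in the low thousands, which is why a small flow of liquid can do the work of a very large volume of moving air.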

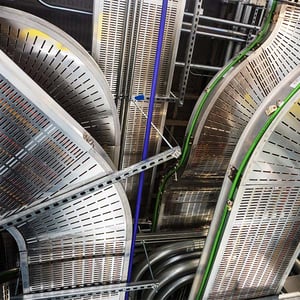

Raised Floor Plenums

Raised floor cooling is common in colocation environments because of its reliability and flexibility. The underfloor plenum is pressurized with cold air via air handlers. Perforated tiles are installed in front of server cabinets to allow the cold air to escape the pressurized plenum and be drawn into the IT equipment. Perforated tiles come in various sizes to allow more or less air to reach the server cabinets as needed, based on densities and CFM (cubic feet per minute) requirements.
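
As a rough illustration of how a CFM requirement follows from cabinet density, the sketch below applies the standard sensible heat relationship for air (BTU/hr = 1.08 x CFM x temperature rise in degrees F, valid for air near sea-level density) together with the conversion of 3.412 BTU/hr per watt. The function name and the example load and temperature rise are hypothetical, chosen only for illustration.

```python
BTU_PER_HR_PER_WATT = 3.412
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per CFM per degree F, standard air near sea level


def required_cfm(it_load_watts: float, delta_t_f: float) -> float:
    """Airflow (CFM) needed to carry away it_load_watts at a given air temperature rise."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    heat_btu_per_hr = it_load_watts * BTU_PER_HR_PER_WATT
    return heat_btu_per_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    # Hypothetical cabinet: 5 kW of IT load with a 20 F rise from intake to exhaust.
    print(f"{required_cfm(5000, 20):.0f} CFM")
```

For the hypothetical 5 kW cabinet with a 20 F rise, this works out to roughly 790 CFM. Doubling the load, or halving the allowable temperature rise, doubles the airflow the tiles must deliver.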

Hot and Cold Aisle Layouts

In a typical air-cooled computer room, server cabinets and racks are arranged in rows, with the equipment oriented so that the aisles alternate between hot and cold air. In a slab floor room, cold air is directed down the cold aisle to reach the server intakes. Hot air exits the back of the servers and is drawn into the CRAC/CRAH returns, where it passes over the coils and its heat is transferred out of the room. In a raised floor environment, cold air from the CRAC or CRAH flows through the space below the floor, and the previously mentioned perforated tiles let it leave the plenum and enter the cold aisles, ideally in front of server intakes. Again, the servers reject heat to the hot aisle, and the heated air returns to the CRAC/CRAH to be cooled, usually after some mixing with the cold air.

Many best practices, such as installing blanking panels, cabinet side panels, hot or cold aisle containment, and simply ensuring proper server orientation, are essential to a successful hot/cold aisle environment.

New ASHRAE Cooling Guidelines

The solution to maintaining optimal environments in data centers, though, isn’t simply to cool them to the temperature of high-tech meat lockers. Although the conventional wisdom used to be to keep the temperature as low as 55 degrees Fahrenheit (F), research has led to new data center cooling best practices.

The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) develops and publishes temperature and humidity recommendations for data centers. In its most recent set of guidelines, ASHRAE recommends data center temperatures in the range of 64.4 to 80.6 F (18 to 27 degrees Celsius) and relative humidity of up to 60 percent.2
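
For teams that collect their own sensor data, a check against these figures might look something like the following Python sketch. It uses the temperature range quoted above and treats the 60 percent humidity figure as an upper limit, which is an assumption about how to read the recommendation. The function and constant names are hypothetical and are not part of any CoreSite tool.

```python
RECOMMENDED_TEMP_RANGE_F = (64.4, 80.6)  # range quoted in the article
MAX_RELATIVE_HUMIDITY_PCT = 60.0         # assumed to be an upper limit


def fahrenheit_to_celsius(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0


def check_reading(temp_f: float, relative_humidity_pct: float) -> list[str]:
    """Return a list of problems with a reading; an empty list means it is in range."""
    issues = []
    low_f, high_f = RECOMMENDED_TEMP_RANGE_F
    if temp_f < low_f:
        issues.append(f"temperature {temp_f} F is below the recommended {low_f} F")
    elif temp_f > high_f:
        issues.append(f"temperature {temp_f} F is above the recommended {high_f} F")
    if relative_humidity_pct > MAX_RELATIVE_HUMIDITY_PCT:
        issues.append(
            f"humidity {relative_humidity_pct}% exceeds {MAX_RELATIVE_HUMIDITY_PCT}%"
        )
    return issues


if __name__ == "__main__":
    print(check_reading(72.0, 45.0))  # within range
    print(check_reading(85.0, 65.0))  # too warm and too humid
```

Note that an intake reading well below the low end of the range is flagged too, since overcooling wastes energy without adding protection.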

This recommended increase in data center temperatures is aligned with CoreSite’s standard environmental SLAs. Keep in mind that data center cooling systems can account for as much as 50% of data center energy use. So, making temperature adjustments can substantially reduce energy consumption while still protecting IT equipment and preventing downtime.

As a matter of fact, we recently developed a “Dos and Don’ts for Customers” checklist/guide. It’s a list of best practices to help you understand your role in maximizing cooling and energy efficiency in your cabinets and cages. After all, we’re in this deployment together!

You can get a copy of the guide here.

New methods for cooling data centers are always emerging. For example, geothermal cooling, which has become popular in the construction of some personal residences, can also be used in data centers. Geothermal cooling takes advantage of the fact that the temperature of the earth below surface level is near-constant, as opposed to the seasonal temperature variations above ground. The cool, stable temperature of the earth is leveraged for heat transfer as the cooling medium (typically water) is pumped to underground heat exchangers. Heat is transferred out of the cooling medium into the ground, and the cooled medium is sent back to the computer room to absorb heat again.

To discuss your unique data center requirements and learn more about what CoreSite is doing to innovate data center cooling, contact us today.