After decades of relative obscurity, data centers are getting a lot of attention. You can credit artificial intelligence, specifically generative AI, for the limelight. Of course, with fame come challenges. For data centers, that means justifying the energy their customers use to run AI and the thousands of other services data centers enable, while being mindful of the impact on communities and on electrical energy demand in general.

I recently took part in a panel discussion titled “The Data Center Problem.” As the lone person from a data center company, I felt defensive initially. But what I found as panel members exchanged thoughts and questions is that the different kinds and purposes of data centers are not well understood. It’s difficult for people to conceptualize what happens in data centers that drives energy demand, and data center providers alone can’t solve the challenge.

Inspired by that experience, I thought I’d attempt to provide education in each of those areas.

The Different Types of Data Centers

There’s an adage that goes: “Ask 10 people a question about X and you’ll get 10 different answers.” Given the topic at hand, I thought it would be fun to instead ask ChatGPT to give me 10 answers to the question: “What is a data center?”

Here’s the result, narrowed to the five that are most accurate:

- A facility that houses IT equipment: A data center is a specialized building or space that contains servers, storage systems and networking equipment used to store, process and manage data.

- A place to store and manage data: It’s a centralized location where organizations store their critical applications and data.

- The backbone of cloud services: Data centers are essential for hosting cloud computing services and delivering content over the internet.

- A server room on a larger scale: It’s essentially a large-scale version of a server room, often with multiple racks of servers and advanced cooling and power systems.

- A critical infrastructure for businesses: Data centers are where most modern businesses keep their mission-critical systems, including databases, websites and communication platforms.

Overall, good responses, especially if you are talking about data centers in broad terms. However, beginning to understand the synergy between energy, data and data centers calls for digging deeper. Let’s start with the differences between on-premises, colocation and hyperscale data centers.

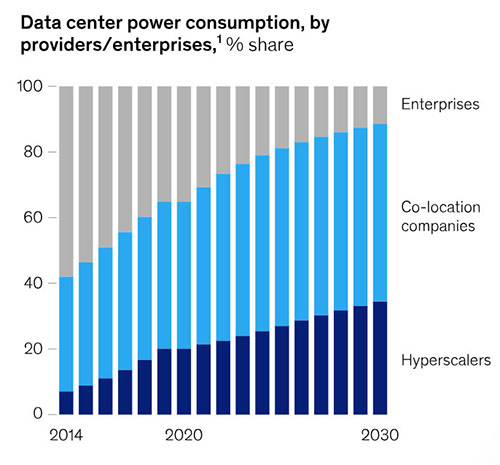

Figure 1. The share of energy used by digital enterprises and service providers is projected to shift as AI and other high-density workloads are moved into colocation, and the number of hyperscale facilities grows.

Source: SRG Research

On-Premises Data Centers

On-premises data centers are private, single-tenant facilities. When enterprises and public sector organizations transitioned from manual processes to digital operations, they added “microcomputers” to the area of their facility where mainframe computers were housed. These organizations operate and maintain the small clusters of computers, which are connected to users over a private, local-area network. They can connect to clouds and other external resources through virtual private networks and the public internet.

An on-premises data center could be a closet, a room or entire floor of a building, depending on the past and present needs of the organization.

“On-prem” data centers are relevant to this post because they are one piece in a hybrid IT strategy (employed by 90% or more of enterprises, depending on the reference source) and because they currently account for approximately 25% of all the energy that data centers consume (see Figure 1). You’ll see that this percentage is projected to decrease while colocation energy consumption increases. The shift is happening because AI adoption and other factors are compelling enterprises to move resources into more efficient, purpose-built colocation facilities as the density of their servers rapidly rises. You will also notice that hyperscale data centers account for less of the total than colocation data centers, even though a hyperscale facility is usually much larger and typically has a higher energy capacity than a colocation facility. That’s because there are about 1,800 colo data centers and only 450 large/hyperscale data centers in the U.S. (source)

Colocation Data Centers

Colocation data centers originated as “carrier hotels,” facilities where network providers could connect with one another to hand off digital communications such as email, early-stage internet voice communications (VoIP) and teleconferencing, which soon became unified communications as a service. Today, we have Zoom and Teams for that.

How big are colocation data centers? It varies, of course, but a quick survey of CoreSite data centers shows that CH2 (Chicago) is 169,000 sq. ft., LA2 (Los Angeles) is 424,000 sq. ft. and VA3 (Virginia) is 940,000 sq. ft. CoreSite often locates two or more data centers close to one another, creating a campus that can interconnect customers with such low latency that it is as if they were in the same building.

ChatGPT’s answer #1 above explains what a colocation data center is (“a specialized building or space that contains servers, storage systems and networking equipment”) but doesn’t define what each party in the business relationship handles. That is a difficult concept for most people to grasp.

In essence, data centers are just buildings, albeit very specialized buildings, and you might be surprised to learn that most data center providers are real estate investment trusts (REITs). We own (sometimes lease) buildings and make them ideally suited for running IT infrastructure.

As you know, almost every activity in our day-to-day lives is touched by digital transformation, and colocation data centers are playing a central role, especially in AI adoption and development. Colocation data centers are multi-tenant businesses, as opposed to on-premises or hyperscale data centers, which often serve a single entity. For customers, this opens the opportunity to interconnect with relevant businesses and needed service providers, with low latency and high security. That said, each member of the ecosystem adds to the demand for energy, and the amount varies with the compute density that best suits their workloads.

Colocation providers are responsible for the power delivery systems, backup power solutions, interconnections, environmental controls and security systems. It’s the ecosystem of customers in the data center that drives energy consumption. Data center customers own the IT hardware (routers, switches, servers, etc.), which is the vehicle for their business activities; and their end customers, you and me, drive demand and subsequently energy usage for those activities.

Colocation providers are keenly focused on power usage effectiveness, or PUE. This energy efficiency metric is the ratio of the total power used by a data center to the power used by customer network and computing equipment; lower is better, and 1.0 would mean every watt goes to IT equipment. Enterprise facilities by and large don’t cultivate that area of expertise and are not purpose-built to be as efficient. CoreSite’s average data center facility PUE is 1.36, while enterprises typically hover around 2.0 or higher.
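To make those PUE numbers concrete, here is a minimal Python sketch. The 1.36 and 2.0 values come from the paragraph above; the 1,000 kW IT load and the function names are my own illustrative assumptions, not measurements from any facility.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def facility_power_kw(it_load_kw: float, pue_value: float) -> float:
    """Total facility power implied by an IT load and a PUE."""
    return it_load_kw * pue_value


def overhead_kw(it_load_kw: float, pue_value: float) -> float:
    """Power spent on cooling, power conversion, lighting, etc. (everything non-IT)."""
    return facility_power_kw(it_load_kw, pue_value) - it_load_kw


if __name__ == "__main__":
    IT_LOAD_KW = 1000.0      # hypothetical customer IT load, for illustration only
    COLO_PUE = 1.36          # average facility PUE quoted in the text
    ENTERPRISE_PUE = 2.0     # typical enterprise figure quoted in the text

    colo_total = facility_power_kw(IT_LOAD_KW, COLO_PUE)
    enterprise_total = facility_power_kw(IT_LOAD_KW, ENTERPRISE_PUE)

    print(f"Colocation total:  {colo_total:.0f} kW "
          f"(overhead {overhead_kw(IT_LOAD_KW, COLO_PUE):.0f} kW)")
    print(f"Enterprise total:  {enterprise_total:.0f} kW "
          f"(overhead {overhead_kw(IT_LOAD_KW, ENTERPRISE_PUE):.0f} kW)")
    print(f"Reduction in total power: {1 - colo_total / enterprise_total:.0%}")
```

Under these assumptions, the same IT load draws roughly a third less total power at a PUE of 1.36 than at 2.0, and the difference comes entirely from overhead rather than from the customer's equipment.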

Hyperscale Data Centers

Hyperscale data centers are not defined just by physical size but also the ability to rapidly, massively scale up compute resources. TechTarget describes them as follows: The typical facility is at least 10,000 square feet, according to IDC, with some of the largest facilities reaching hundreds of thousands or even millions of square feet. They are designed to house thousands of servers and provide a data transport scheme “that combines compute, storage and virtualization layers into a single computing environment.”

Google, Amazon, Microsoft and Meta are among the world’s leading hyperscale data center owners and operators. Companies with hyperscale requirements either build their own facilities or lease entire data centers or computer rooms from third-party data center providers. Most hyperscale facilities are single-tenant and are focused on providing specific services at "wholesale" scale.

Collaboration Amongst Stakeholders is Essential to the Energy Solution

Ultimately, data center energy use starts with you and me. Every time we use a smartphone, post to social media, or stream music or a movie, we generate data and cause servers in data centers to process data – which results in energy being used by one or more data centers. Granted, each of those actions causes only a small amount of energy to be used. But when you aggregate the number of users and look at activity, it’s jaw-dropping. One source I found, DataReportal, published findings developed from We Are Social and Meltwater metrics:

- There were 331.1 million internet users in the U.S. at the start of 2024, when internet penetration stood at 97.1%.

- The U.S. was home to 239.0 million social media users in January 2024, equating to 70.1% of the total population.

- A total of 396.0 million cellular mobile connections were active in the U.S. in early 2024, with this figure equivalent to 116.2% of the total population.

If no one streams Netflix, uses ChatGPT, saves files to the cloud or uses cloud compute services, the data center uses very little energy. Conversely, with each stream, data transfer to the cloud or order from Amazon, more data is produced and more energy is consumed.

Figure 2. Even before GenAI, server rack density was increasing to support digital transformation.

Source: Cushman & Wakefield Research

This is where density comes into the picture. As computer chips have become more powerful, exponentially so with GPUs (graphics processing units, the chips that enable AI), they have also become more “dense.” GPUs have more than a billion transistors on a single chip. Density builds up as chips are integrated into a server and servers are combined into a rack.

On the plus side, we are getting more compute power from the same footprint as a lower-density deployment, and enterprises and/or end users have improved experiences – faster search results, speedier downloads, 4K HD streaming, and so on. The tradeoff? More power needed, more heat generated, significantly larger data transfers and the associated broadband fees.

You probably know of Moore’s Law, which observes that the number of transistors on a chip (and, loosely, processing power) doubles roughly every 18 to 24 months. Koomey’s Law is its energy counterpart: it states that the energy efficiency of computers doubles roughly every 18 months. Gains in efficiency and cost reductions can start with the chips and power boards. Infineon Technologies Division President Athar Zaidi frames the potential savings by saying that engineers designing chips need to “think power-first” and that the butterfly effect of incremental efficiency gains can be game-changing. “A one percent efficiency gets multiplied when it goes to a board, multiplied when it goes to a rack, multiplied when it goes to a data center, and to a cluster…you are talking about millions and millions of dollars in total cost of ownership at scale,” he explains.
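To get a feel for what an 18-month doubling means, here is a small Python sketch. It assumes an idealized, perfectly steady doubling every 1.5 years (taken from the “roughly every 18 months” figure above); real hardware does not follow such a smooth curve, and the function names are hypothetical.

```python
def efficiency_multiplier(years: float, doubling_period_years: float = 1.5) -> float:
    """Computations per unit of energy, relative to today, after `years`.

    Assumes idealized exponential growth with a fixed doubling period.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (years / doubling_period_years)


def relative_energy_per_computation(years: float,
                                    doubling_period_years: float = 1.5) -> float:
    """Energy needed for the same computation, relative to today."""
    return 1.0 / efficiency_multiplier(years, doubling_period_years)


if __name__ == "__main__":
    for years in (1.5, 3.0, 6.0):
        gain = efficiency_multiplier(years)
        energy = relative_energy_per_computation(years)
        print(f"After {years} years: {gain:.0f}x the work per unit of energy, "
              f"or {energy:.1%} of today's energy for the same work")
```

The point of the sketch is the shape of the curve: under this assumption, the same workload needs a quarter of the energy after three years. Whether total energy use falls depends on how fast demand grows over the same period.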

It’s my opinion that data center providers, utility companies, chip manufacturers and energy regulatory bodies can work together to develop guidelines and incremental innovations that reduce the carbon footprint and encourage the mix of energy sources to become progressively cleaner, without requiring changes to data center power systems that are economically burdensome. It truly is a marathon.

The Impact of Data Centers on the Economy and Your Life

According to McKinsey & Company, “Generative AI alone could add the equivalent of up to $4.4 trillion annually to the global economy, including up to $100 billion in the telecommunications sector, $130 billion in media and $460 billion in high tech. However, unlocking the promise of AI will take an entire ecosystem to support the technology.”

I’m pointing to these statistics not just to illustrate the astounding financial potential of the most disruptive technology in decades, but also to open discussion on how we are all part of both the problem and the solution.
