Three Not-So-Obvious Ways Artificial Intelligence Is Shaping the Data Center Industry

AI is affecting data centers in many ways, including some that have little to do with compute density or data. How are data center providers responding to the demand for more facilities, improving operational support and implementing liquid cooling?

Digital transformation is driving spikes in data center demand. As a result, primary markets are struggling to provide available capacity, secondary markets are gaining customers and REITs are accelerating data center construction.

Source: JLL

Editor's Note: This content originally appeared in InterGlobix Magazine in a series of "Interconnection Insights" articles by Chris Malayter.

In this article, I discuss three ways that artificial intelligence is impacting data centers. You might be surprised that I will not focus on data center performance as related to data transfer or workloads, but instead on how AI can help data center providers address broad industry challenges.

Taking on the Supply and Demand Imbalance

Did you know that many data center providers are, at their core, real estate investment trusts, or REITs? Data centers certainly are hubs for interconnection and places where servers crunch data for hosted applications and private clouds. However, all of that happens after a facility is built, a years-long process that spans everything from land acquisition and permitting to construction and commissioning.

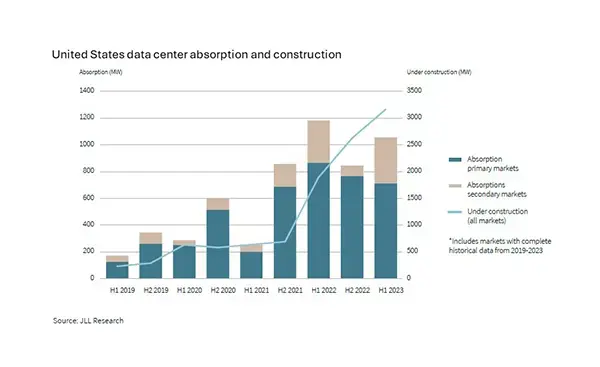

Build time matters because demand for data center services jumped in 2021, driven primarily by accelerated cloud adoption and AI.[1] That surge led to more construction starts, capacity constraints in primary markets and more customers colocating in secondary markets. All signs point to even greater demand in the future.

Here’s one way AI can be used to accelerate data center construction. The first phase of building a data center includes developing a “30% spec,” a general drawing of the proposed facility that takes about 90 days to produce. A company that CoreSite engages for that service is training an AI model on construction data, with the goal of reducing the time to generate a 30% spec from months to a handful of days.

Speeding colocation deployments is another way to respond to the demand for services. When designing a customer deployment, a computational fluid dynamics (CFD) analysis is performed to determine the airflow required to control the environment within a computer room. The analysis can be very complex. Thanks to supercomputing and AI, a CFD analysis can now be completed in minutes instead of days. For our customers, that means reduced time to cloud and more optimized energy use, which contributes to savings for all customers in the data center.
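
A full CFD model resolves where air actually flows around racks, floor tiles and containment, which is why it is computationally heavy. The bulk airflow figure it refines can be estimated with a common sensible-heat rule of thumb, BTU/hr ≈ 1.08 × CFM × ΔT (°F), where 1 W ≈ 3.412 BTU/hr. The Python sketch below applies only that rule; it is not a CFD analysis, and the rack names, loads and 20 °F temperature rise are hypothetical values chosen for illustration.

```python
# Sensible-heat rule of thumb for air cooling (imperial units):
#   BTU/hr = 1.08 * CFM * delta_T_F
# The 1.08 factor bundles standard air density, specific heat and 60 min/hr.
BTU_PER_HR_PER_WATT = 3.412
SENSIBLE_HEAT_FACTOR = 1.08


def required_cfm(it_load_watts: float, delta_t_f: float) -> float:
    """Airflow (cubic feet per minute) needed to carry away `it_load_watts`
    of heat with an inlet-to-exhaust temperature rise of `delta_t_f` degrees F."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    btu_per_hr = it_load_watts * BTU_PER_HR_PER_WATT
    return btu_per_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


# Hypothetical deployment: rack IT loads in watts (illustrative values only).
racks = {"rack-a": 8_000, "rack-b": 12_000, "rack-c": 30_000}
DELTA_T_F = 20.0  # assumed server inlet-to-exhaust temperature rise

for name, watts in racks.items():
    print(f"{name}: {required_cfm(watts, DELTA_T_F):,.0f} CFM")

total = sum(required_cfm(w, DELTA_T_F) for w in racks.values())
print(f"total: {total:,.0f} CFM")
```

Because required airflow scales linearly with load and inversely with the allowed temperature rise, a 30 kW rack needs several times the air of a typical rack, which is one reason high-density deployments strain air cooling.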

Granted, none of these examples alone will drastically cut the time needed to build and optimize data centers. But it all adds up. And while the construction industry can be slow to adopt new technologies, 92% of construction companies surveyed for Peak’s Decision Intelligence Maturity Index said they already use or intend to use AI in their workflows.[2]

Enabling More Effective Operational Support

Everyone is experiencing an increasing volume of digital noise – emails, texts, targeted marketing campaigns and even robocalls. Sorting what requires action or a response from what can be ignored is a conundrum, but it matters because important information may be buried in the mix. Now multiply that volume many times over, and make the messages alerts, alarms and reports from hundreds, if not thousands, of sources inside and outside a data center. Welcome to a day in the life of an operations support center (OSC) professional.

The fact is that IT infrastructures are extremely complex, and “events” that slow or disrupt operations – network traffic bottlenecks, equipment failures, fiber cuts, even planned maintenance – happen regularly. As a result, OSC teams field a “metric ton” of messages every day. They also need to investigate literally hundreds of warning lights on multiple dashboards, triggered by power fluctuations or spikes in temperature or humidity. Missing something or responding slowly could result in lost data or revenue, poor user experiences, or downtime with expensive, far-reaching repercussions.

The sheer volume of messages is problematic, but the real challenge is correlating them to identify what is important. Accurate, rapid detection is critical to knowing exactly what needs to be done.

CoreSite is exploring conversational AI, enabled by natural language processing and machine learning, as a solution. Rather than relying on a manually populated custom database for future reference, an application built with conversational AI could process alerts as well as voice, text and email queries about network status, and then correlate those messages with the information we get from network monitoring services. The application could also handle internal data center events.
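
The correlation step can be illustrated with a simple Python sketch. It assumes every alert has already been tagged with the component it concerns (the `component` field and all names and values here are hypothetical) and groups alerts about the same component that arrive close together in time into one candidate incident. This is a basic time-window technique, not CoreSite’s application; a real system would also correlate on signals such as network topology and power paths.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    timestamp: datetime
    source: str      # hypothetical: the system or channel that raised the alert
    component: str   # hypothetical: the device or circuit the alert concerns
    message: str


def correlate(alerts: list[Alert], window: timedelta) -> list[list[Alert]]:
    """Group alerts about the same component that arrive within `window`
    of the previous alert in that group. Each group is a candidate incident."""
    groups: list[list[Alert]] = []
    latest_group: dict[str, list[Alert]] = {}  # most recent group per component
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        group = latest_group.get(alert.component)
        if group and alert.timestamp - group[-1].timestamp <= window:
            group.append(alert)  # close in time: same incident
        else:
            group = [alert]      # too far apart or new component: new incident
            groups.append(group)
            latest_group[alert.component] = group
    return groups


t0 = datetime(2024, 1, 1, 12, 0)
alerts = [
    Alert(t0, "bms", "pdu-7", "input voltage sag"),
    Alert(t0 + timedelta(seconds=20), "nms", "pdu-7", "outlet 3 offline"),
    Alert(t0 + timedelta(seconds=45), "email", "pdu-7", "customer reports link down"),
    Alert(t0 + timedelta(minutes=30), "bms", "crah-2", "return air temp high"),
]
incidents = correlate(alerts, window=timedelta(minutes=2))
for incident in incidents:
    print(f"{incident[0].component}: {len(incident)} alert(s)")
```

Here four raw messages collapse into two candidate incidents, one per affected component, which is the kind of noise reduction an OSC technician needs before deciding what to act on.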

Once the AI model is trained, the application could infer why an event occurred and point the OSC technician to recommended actions. Keep in mind that a person receives the inference and recommendation. While AI tools can help people work smarter, there’s no substitute for experience and intuition when it comes to being highly effective.
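
The inference-plus-recommendation flow, with a person in the loop, might look something like the Python sketch below. A hypothetical lookup table (`PLAYBOOK`) stands in for a trained model: it scores each known alert signature by the fraction of its messages present in an incident and returns the best match as advice for a technician to review, never as an automatic action. The signatures, causes and actions are illustrative assumptions, not CoreSite procedures.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    probable_cause: str
    actions: list[str]
    confidence: float  # fraction of the matched signature that was observed
    # The output is advice for an OSC technician; nothing is executed automatically.
    requires_technician_review: bool = True


# Hypothetical playbook standing in for a trained model: each known alert
# signature maps to a probable cause and recommended actions.
PLAYBOOK: dict[frozenset[str], tuple[str, list[str]]] = {
    frozenset({"input voltage sag", "outlet 3 offline"}): (
        "upstream power disturbance",
        ["check UPS and PDU event logs", "verify the redundant power feed"],
    ),
    frozenset({"return air temp high", "supply fan fault"}): (
        "cooling unit airflow failure",
        ["inspect the CRAH supply fan", "check inlet temperatures of nearby racks"],
    ),
}


def recommend(observed: set[str]) -> Recommendation:
    """Return the playbook entry whose signature best overlaps the observed messages."""
    best = Recommendation("unknown", ["escalate to the on-call engineer"], 0.0)
    for signature, (cause, actions) in PLAYBOOK.items():
        score = len(signature & observed) / len(signature)
        if score > best.confidence:
            best = Recommendation(cause, actions, score)
    return best


rec = recommend({"input voltage sag", "outlet 3 offline", "customer reports link down"})
print(rec.probable_cause, rec.confidence, rec.actions)
```

Unmatched incidents fall through to an escalation rather than a guess, reflecting the point that the technician, not the tool, makes the final call.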

Implementing Liquid Cooling in High Density Deployments

AI’s recent big splash has sparked more discussion of liquid cooling as a way to cope with the heat produced by graphics processing units (GPUs) and tensor processing units (TPUs), the chips, and the specialized servers built around them, that serve as engines for AI.

Before delving into approaches to liquid cooling, I’ll remind you that high-density computing has been around for some time. Graphics rendering in content production, gaming and earlier types of AI are prime examples. “Traditional” air cooling has been capable of handling these and other heat-intensive workloads. Likewise, liquid cooling is a time-tested approach: it was used in mainframe systems between 1970 and 1995, and has been used in high-performance computing since 2010.[3] Even so, only about 15% of data center operators use direct-to-chip liquid cooling, according to a 2022 Uptime Institute survey.[4] Approaches include:

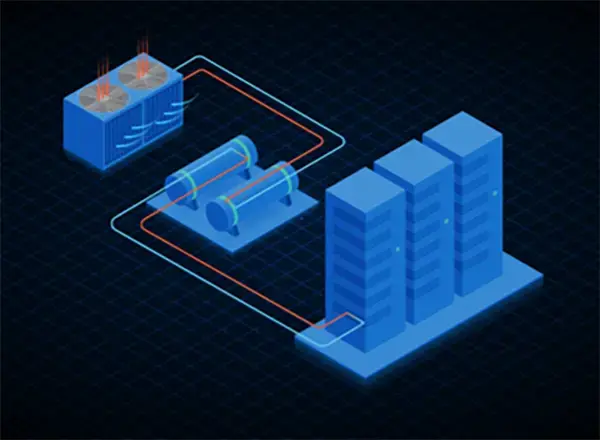

- Rear-door heat exchangers – A liquid heat exchanger replaces the back door of the rack. Fans blow hot air from servers through the exchanger, which circulates coolant to dissipate heat.

- Immersion cooling – Server components are submerged in a container of dielectric liquid and the heat is transferred to the coolant.

- Direct-to-chip liquid cooling – Cool liquid is circulated via tubes to cold plates directly adjacent to the hardware components.

The laws of thermodynamics favor liquid cooling: water and dielectric liquids absorb and carry heat far more efficiently than moving air. Liquid cooling offers data centers other benefits as well, chiefly:

- Reduced energy use – Liquid cooling reduces dependence on fans, and the pumps it requires use far less energy than the fans used in conventional air cooling.[5]

- Extended device life – Keeping equipment within design specifications, which is easier to do with liquid cooling, reduces stress on chips and other components.

- Noise reduction – Liquid cooling is quieter than air cooling because it reduces or eliminates the need for fans and, in some designs, chillers.

- Space conservation – Liquid cooling equipment takes up significantly less space than fans.
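
The thermodynamic advantage of liquids over air can be made concrete with the heat-transport relation Q = ρ · c_p · V̇ · ΔT, where V̇ is the volumetric coolant flow. The Python sketch below uses approximate room-temperature textbook properties for air and water, a hypothetical 30 kW rack and an assumed 10 K coolant temperature rise; it compares only how much coolant volume each medium must move, not a complete cooling design. Dielectric liquids carry less heat per unit volume than water, but still vastly more than air.

```python
# Approximate properties near room temperature (textbook values).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 997.0    # kg/m^3
WATER_CP = 4180.0        # J/(kg*K)


def volumetric_flow(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Rearranged Q = rho * cp * V_dot * dT: coolant flow in m^3/s
    needed to remove `heat_w` watts with a `delta_t_k` kelvin rise."""
    return heat_w / (density * cp * delta_t_k)


HEAT_W = 30_000.0   # hypothetical high-density rack load
DELTA_T_K = 10.0    # assumed coolant temperature rise

air = volumetric_flow(HEAT_W, AIR_DENSITY, AIR_CP, DELTA_T_K)
water = volumetric_flow(HEAT_W, WATER_DENSITY, WATER_CP, DELTA_T_K)

print(f"air:   {air:.2f} m^3/s")
print(f"water: {water * 1000:.2f} L/s")
print(f"air must move about {air / water:,.0f}x the volume of water")
```

The ratio, more than 3,000 to 1, comes entirely from the difference in heat capacity per unit volume, which is why a modest pump flow can replace a large volume of fan-driven air.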

Staying Grounded in Light of Dazzling Possibilities

In a recent blog, Juan Font, President and CEO of CoreSite and SVP of American Tower, said:

“Evolving data center design is a reminder that AI is not an ethereal thing. AI is capital, concrete, steel, generators and HVAC systems. It’s raised floors, servers, switches and fiber optics. And it’s the people who work around the clock to achieve operational excellence…”

With so many lofty expectations for AI, it’s easy to lose sight of what enables it. Maybe the next time you hear about ChatGPT, autonomous vehicles, robotic surgeries or smart cities, you’ll remember that the path to an AI-transformed future runs through an AI-impacted data center.

Know More

In this brief video, CoreSite's Anthony White, Senior Manager, Data Center Operations, delivers practical advice in our series: "How to Optimize Your Data Center Deployment."

References

1. North America Data Center Report: Record demand meets limited supply, JLL, 2023

2. Integrating Artificial Intelligence Into Construction Projects, RTInsights, 2023

3. The Evolution of Data Center Cooling: Liquid Cooling, Data Center Frontier, 2021

4. Direct liquid cooling: pressure is rising but constraints remain, Uptime Institute, 2022

5. Is the Data Center Industry Finally Ready to Take Liquid Cooling Seriously? Datacenters.com, 2023